In Part 1, I detailed some of the basics of SenseTime, China’s leading computer vision company. And I argued it was basically a linear business model, not a platform. It reminded me of Adobe and traditional software companies.

But the economics of AI software companies at scale are still unknown. Especially when it is done via a mass production method like SenseTime’s. I ended Part 1 with the following two points:

- SenseTime is a factory for mass producing AI models.

- It is very focused on building economies of scale.

But what are the advantages of scale for an AI business?

That’s a big question.

- Are the economics going to improve or deteriorate with increasing volume?

- Are the models going to get better and easier?

- Or are they going to become more difficult and expensive to maintain?

- Is competitive strength going to increase or decrease?

- Are we going to see feedback loops and network effects?

- Is SenseTime going to become dramatically more profitable and powerful as it grows?

- Is this a Google and Facebook situation where everyone underestimated what was going to happen as it scaled?

- Or is this an Uber situation where it ultimately had limited competitive strength? And the economics just never improved?

It’s hard to know because AI software is something new. And it is dramatically more complicated than the traditional software we see in companies like Adobe.

But here’s my best take (thus far).

What Are the Advantages of Scale When Mass Producing AI Models?

If SenseTime was a physical factory, we would know what happens when it gets larger (versus competitors). It would likely start to show lower per unit costs. This production cost advantage would follow from:

- Economies of scale in the major fixed operating and capital costs of production. There are a lot of fixed costs in operating a factory. Plus, there is the maintenance capex. So, per unit costs should be lower. This is CA11 in my 6 Levels of Digital Competition.

- A rate of learning advantage (i.e., experience effect) in the more complicated products. As cumulative production increases, staff get better and more specialized. Things do become more productive and efficient. In more complicated products, this can often be maintained as a cost advantage. This is CA15 in my 6 Levels of Digital Competition. Also, L in Smile Marathon.

- The company would also likely outspend its competitors on R&D and maybe marketing. These are more fixed cost economies of scale. The R&D spending could show up in superior products, greater efficiency in the factory and/or in lower prices. Fixed cost economies of scale can be used for offense or defense.

The above are all economies of scale advantages. They are cost advantages based on superior scale versus a competitor.
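To make the first two bullets concrete, here is a minimal sketch of the arithmetic. The function names and all the numbers are hypothetical and are not SenseTime figures. The first function spreads a fixed cost over volume. The second uses the standard experience curve form (Wright's law), where unit cost falls to a fixed fraction of its previous level each time cumulative production doubles.

```python
import math


def unit_cost(fixed_cost: float, variable_cost: float, volume: int) -> float:
    """Average cost per unit when a fixed cost is spread over `volume` units."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + variable_cost


def experience_curve_cost(first_unit_cost: float, cumulative_units: int,
                          progress_ratio: float) -> float:
    """Wright's law: cost of the n-th unit, given the cost of the first.

    progress_ratio=0.8 means cost drops to 80% of its prior level
    with every doubling of cumulative output.
    """
    if cumulative_units <= 0:
        raise ValueError("cumulative_units must be positive")
    exponent = math.log2(progress_ratio)  # negative when progress_ratio < 1
    return first_unit_cost * cumulative_units ** exponent


# Hypothetical factories: same fixed and variable costs, 10x difference in volume.
small_factory = unit_cost(fixed_cost=1_000_000, variable_cost=50, volume=10_000)
large_factory = unit_cost(fixed_cost=1_000_000, variable_cost=50, volume=100_000)
print(small_factory, large_factory)  # 150.0 60.0

# Hypothetical 80% experience curve, starting at a cost of 100 for unit 1.
for n in (1, 2, 4, 8):
    print(n, round(experience_curve_cost(100.0, n, 0.8), 2))
```

Note that the fixed cost advantage is purely about relative volume, while the experience effect depends on cumulative output. A larger competitor can have both.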

However, you can also have variable cost advantages. This can be from:

- Proprietary technology and intellectual property. This is CA6 in my 6 Levels of Digital Competition.

- Possibly from labor cost advantages and learning effect, if the product is complicated enough. This is CA7 in my 6 Levels of Digital Competition.

Ok. If that is a normal factory, which of those might apply to SenseTime’s AI factory? What happens when it has more customers, revenue, and/or production volume?

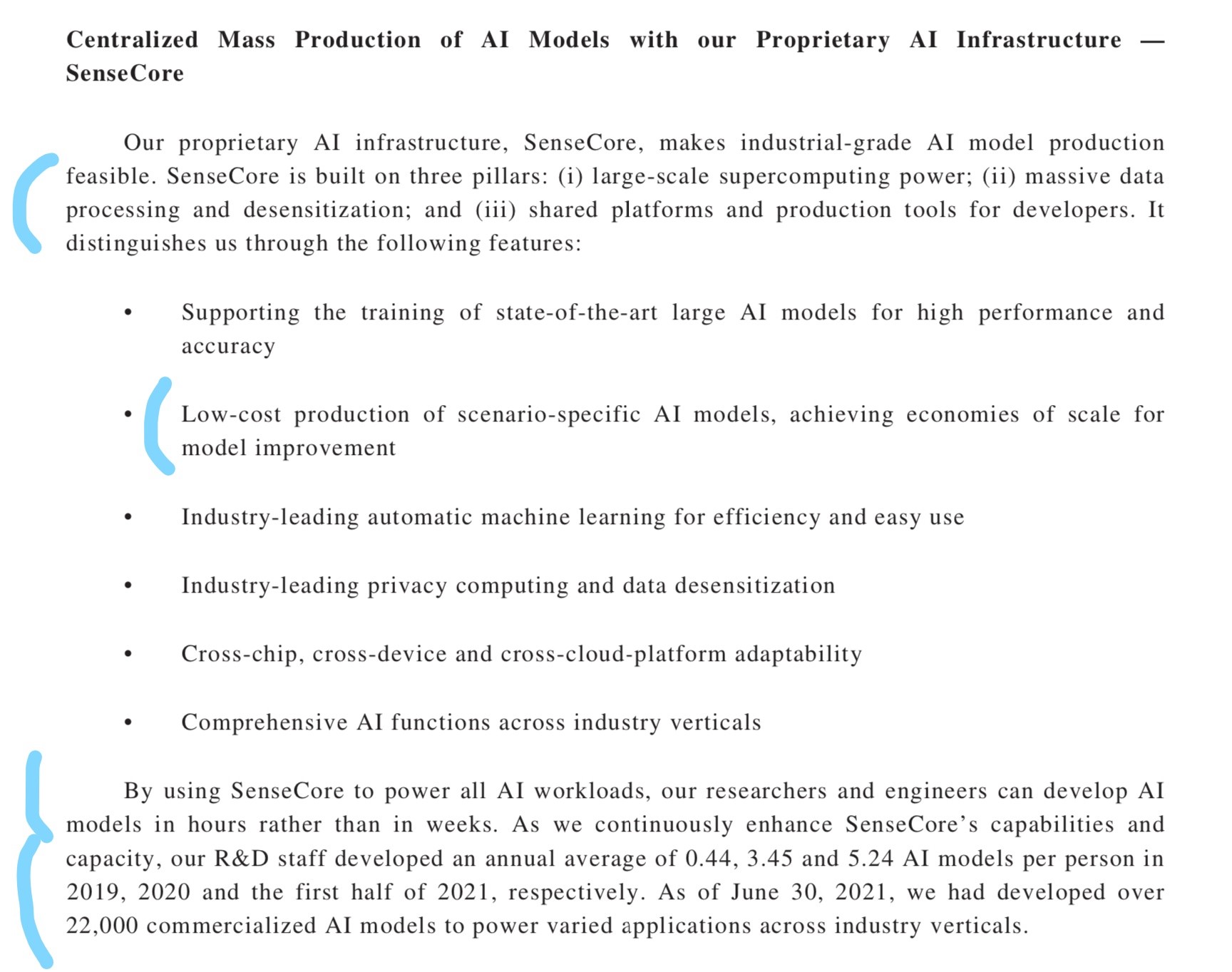

Well, we are definitely seeing #1 and #2. And SenseTime talks explicitly about this. The company states it wants to achieve economies of scale versus competitors. It wants to be able to create high-quality, industrial-strength AI models cheaper and faster than its competitors by getting scale. That means building a bigger data center and having more computing power to train and run AI models. SenseTime’s massive, new-generation Artificial Intelligence Data Center (AIDC) is under construction in the Lingang New Area of Shanghai.

It is also doing #3. It is trying to use its size to outspend its competitors on R&D. That is pretty standard for tech companies. And you can see this adding to #4, where it is building tons of intellectual property and proprietary technology.

You can see all of this in how the company describes its business.

Ok. But that was an easy first pass at this question.

And pretty much everything I just said could have been said about a factory or a traditional software company. But what is different about an AI software company at scale?

The real question is:

Does an AI Software Factory Have Increasing Feedback Loops With Scale? Network Effects? Data Scale Advantages?

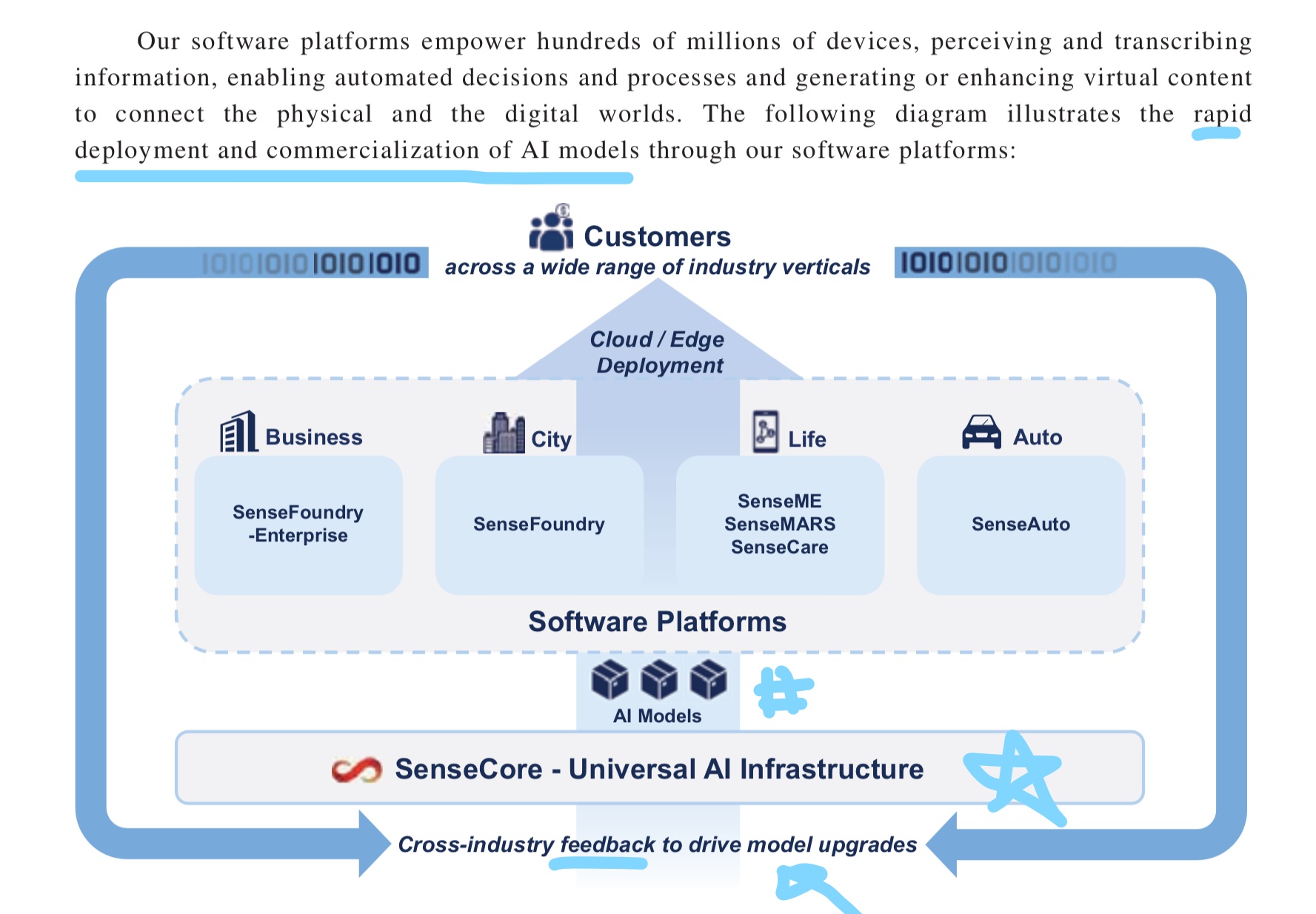

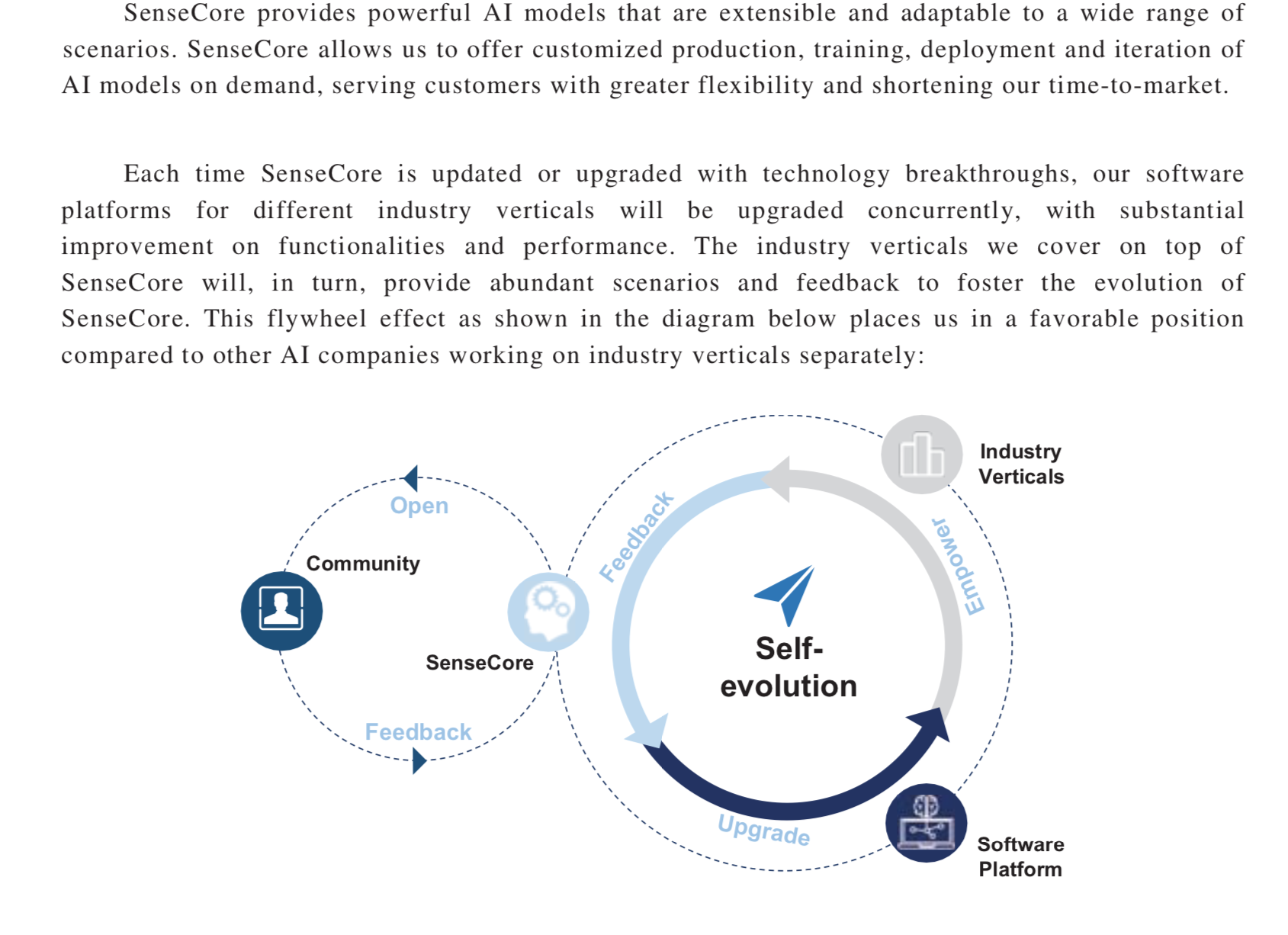

Look at the graphic below from SenseTime (from Part 1). Note the blue arrows the company has put in showing a feedback loop. The AI models being used by customers are feeding back to the factory – and this improves them and also trains new AI models.

Does that mean:

- More customers (companies, government bodies, smartphones) using the algorithms improve them?

- Does having more customers also improve the process for creating new AI models? Faster? Cheaper?

- Is this about more customers or does this also happen when more algorithms are used per customer?

- Does this also happen when customers deploy more cameras and sensors?

- What about when AI models are applied to more and more use cases? Does the type of use case matter? A simple scenario vs. long-tail, rare events?

- Is this feedback mostly about more data flowing in real-time through deployed cameras and sensors?

- Is this feedback more about the data accumulating with the company over time?

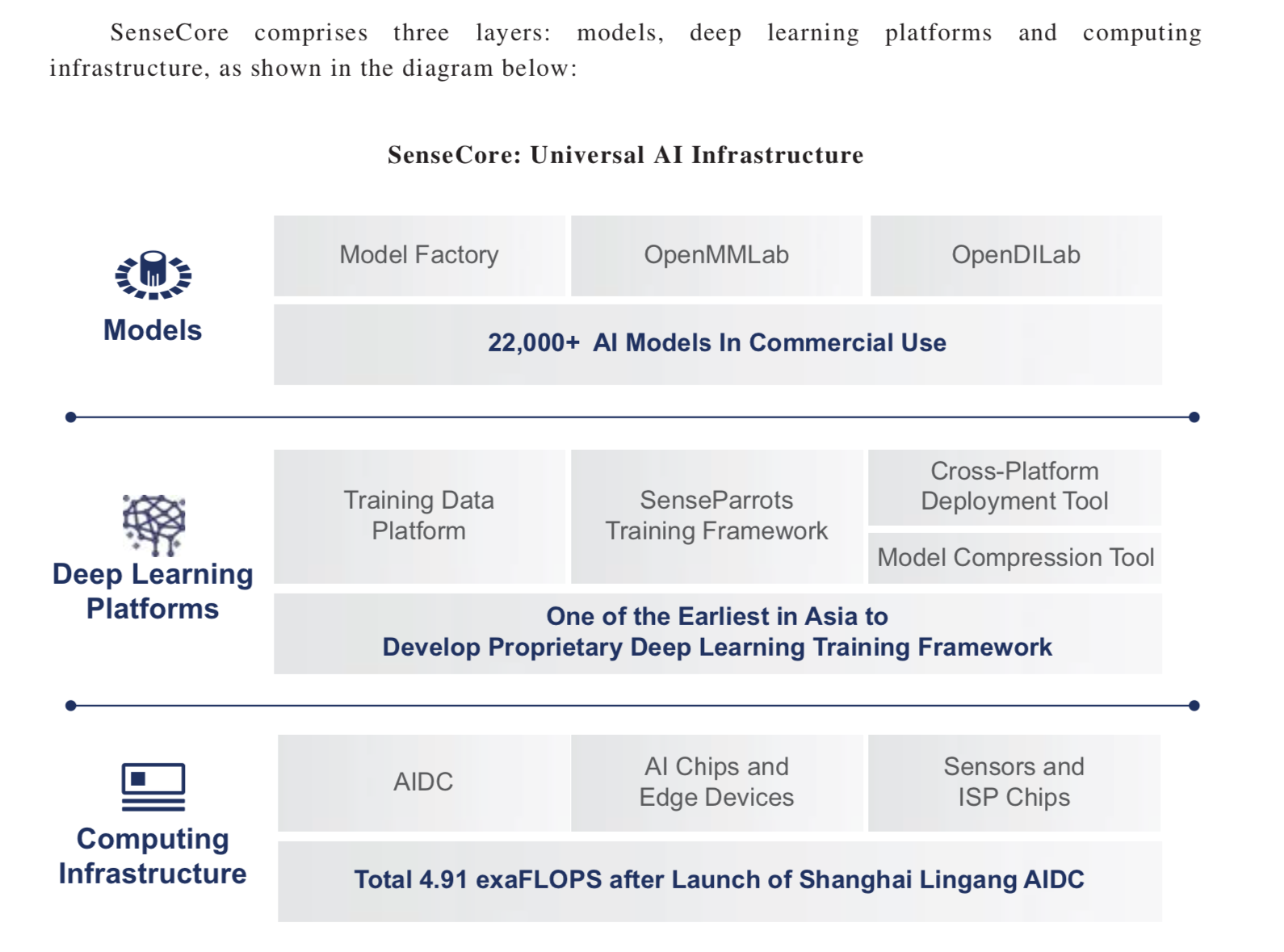

Look at the other graphic that showed SenseCore, the AI factory. Note the capabilities related to training data and training frameworks.

This raises additional questions:

- Does the factory get cheaper, faster and/or smarter when it is producing more AI models per day?

- Does the factory get cheaper, faster and/or smarter when it has a larger library of existing AI Models to draw on?

- When it has more current and/or accumulated training data?

The whole idea of a feedback loop (i.e., a network effect) gets pretty complicated with AI. I boil this all down to three main questions:

- Do existing AI models get better the more they are used? How much? Does it flatline? Does it keep increasing?

- Do existing AI models make each other smarter and better?

- Do existing AI models and the data they collect make additional AI models smarter? Do they make them faster and cheaper to train?

Here is how SenseTime describes its feedback loops.

SenseTime basically argues that with scale comes greater usage and data, which results in continual improvements (the loop on the right).

It also argues that developers will increasingly write for their ecosystem, which would make it a platform business model. I don’t buy that part yet.

Here are the numbers I am looking for:

- What is the time and marginal cost to produce an additional high-quality AI model today? How are these numbers changing with volume and over time?

- What is the average cost to maintain an AI model? What is the cost of ongoing training?

- What is the spectrum of performance by model type? Which types are getting more accurate? Which have flatlined in their performance? Which decay over time and need retraining?

- How do these costs vary with different types of models?

- Industrial grade vs. basic models?

- Simple models vs. long-tail models?

- Critical models vs. “good enough” models?

Ok. That was a lot of me asking questions without giving answers.

I think we are actually going to see a wide spectrum of different behavior with different types of AI models. Some are going to keep improving and benefit from feedback loops. Many are going to become commodities.

Here’s my assessment of SenseTime’s business model (thus far).

Take a look at the bottom of the graphic. We can see the capabilities SenseTime is building (i.e., SenseCore) in yellow. This is how they generate their models. They are also starting to externalize this and sell their production capabilities as a service. That part is clear.

In the center, you can see AI Model Users. This is a Learning Platform. I describe Google Search and Quora with the same one-sided platform. Learning Platforms are still a bit fuzzy to me as a model. But there is clearly a network effect happening, with users getting more value and utility with additional users. And the entire platform is getting smarter (i.e., learning).

At the top, you can see the additional user group (Developers) potentially joining and adding an Innovation Platform.

That’s my working model (thus far). And there is one big network effect so far (a direct one, with more AI model users).

Ok. Last two points, which are less fuzzy. Here are some more solid conclusions for SenseTime.

Like Adobe, They Are Going for Integrated Bundles. That’s Great.

Over time, Adobe grew to a full suite of digital tools for creators. And that was a fantastic strategic choice. Because one of the competitive strengths of digital goods is the ability to bundle. Recall how Microsoft Office did this with devastating effect:

- In the 1990s, there were multiple competing products for Word, Excel and PowerPoint. Each product cost about $500.

- Microsoft bundled its three products into Microsoft Office and priced it at $1,000. This was devastating for its competitors.

- These single product companies could not compete with Microsoft’s three products at a 33% cheaper bundled price.

- Plus, most of these competitors had no ability to offer the other products. They all disappeared.

- And this didn’t really cost Microsoft anything. Digital products have no marginal production costs. So, offering one product for free was lost revenue but it didn’t actually cost anything.
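The bundle math above is easy to check. This is just a quick sketch using the rough prices quoted in the bullets (about $500 per standalone product, $1,000 for the bundle of three). The function name is mine and purely illustrative.

```python
def bundle_discount(standalone_prices: list[float], bundle_price: float) -> float:
    """Fraction saved by buying the bundle instead of each product separately."""
    total = sum(standalone_prices)
    return (total - bundle_price) / total


# Rough figures from the Microsoft Office example above.
standalone = [500, 500, 500]   # three competing single products
office_bundle = 1000           # bundled price for all three

discount = bundle_discount(standalone, office_bundle)
effective_price = office_bundle / len(standalone)

print(f"Bundle discount: {discount:.0%}")                  # Bundle discount: 33%
print(f"Effective price per product: ${effective_price:.2f}")
```

A single-product competitor charging $500 is up against an effective price of about $333 per product. And it has nothing to sell for the other two slots.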

Adobe did the same bundling move but with lots more products. You can buy 1, 2, 3 or more Adobe tools at different bundled prices. You can buy their entire suite at a different price. SenseTime is doing the same thing with its AI models.

But the key word here is “integrated” bundles. The functionality of each product links in with the functionality of others. As a customer, the more products you buy, the better they all tend to be. For example, with Adobe you can buy Premiere Pro to create videos. But if you also buy After Effects, you can make those videos much better. The functionality integrates.

Bundles are a powerful competitive move. And integrated bundles are even better.

SenseTime is absolutely going to do this. They will bundle object recognition with facial recognition with license plate recognition and so on. Some models recognize people by their face. Some by their gait. Some predict traffic accidents. Some recognize frayed power lines above high-speed trains. Some recognize customer interest when window shopping. SenseTime has more than 22,000 models and the number is growing rapidly. There is a huge opportunity to bundle and integrate.

SenseTime is a Big Rundle. That’s Also Great.

When you combine subscriptions and licenses with product bundles, you get recurring revenue bundles, which NYU Professor Scott Galloway has called Rundles. That’s the business model SenseTime is building. It’s not really a concept. But it’s a funny word.

SenseTime has a great business model. The plan going forward is to:

- Get users onboard with more AI models and use cases – and with free versions.

- Upsell users to paid products.

- Continually cross-sell other products, especially with rundles.

- Build in switching costs and make sure customers do renewals.

***

Ok. That was a ton of theory. In Part 3, I’ll do some case studies for their projects. That should make all this more understandable. Plus, they are pretty cool.

It’s worth reading this one last page below from their filing. You can see the things I’ve mentioned all sort of laid out.

That’s it. Cheers, Jeff

———

Related articles:

- SenseTime and an Introduction to AI Software Economics (1 of 3) (Asia Tech Strategy – Daily Article)

- SenseTime’s Metaverse Ambitions. Plus, Cool Case Studies (3 of 3) (Asia Tech Strategy – Daily Article)

- 3 Lessons in China AI/ML from Artefact (Data Consultants and Digital Marketers)

- Adobe Inc. and the Power of Old School Software Economics (Asia Tech Strategy – Podcast 81)

From the Concept Library, concepts for this article are:

- Artificial Intelligence

- Computer Vision

From the Company Library, companies for this article are:

- SenseTime

Photo by Nick Loggie on Unsplash

——–

This content (articles, podcasts, website info) is not investment advice. The information and opinions from me and any guests may be incorrect. The numbers and information may be wrong. The views expressed may no longer be relevant or accurate. Investing is risky. Do your own research.