This week’s podcast is a summary of our visit to the Huawei AI Cloud exhibition in Shenzhen.

You can listen to this podcast here, which has the slides and graphics mentioned. Also available at iTunes and Google Podcasts.

Here is the link to the TechMoat Consulting.

Here is the link to our Tech Tours.

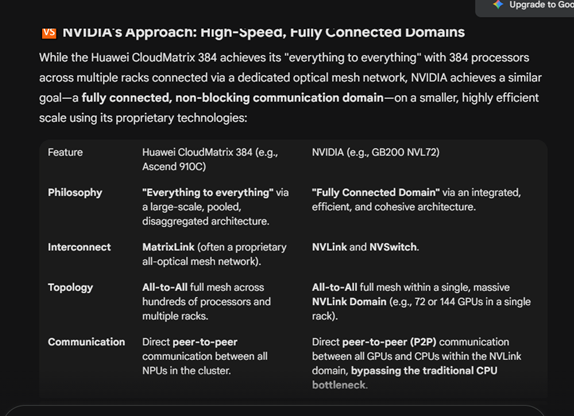

Here is a comparison of Nvidia and Huawei AI clusters (Gemini generated). And a picture of the CloudMatrix 384.

Here are the mentioned articles about AI Infrastructure.

- Understanding AI Infrastructure Part 1: AI Data Centers (Tech Strategy)

- Understanding AI Infrastructure Part 2: AI Compute is Different (Tech Strategy)

- Understanding AI Infrastructure Part 3: GenAI Operating Costs (Tech Strategy)

- Understanding AI Infrastructure Part 4: The Cost of Correctness (Tech Strategy)

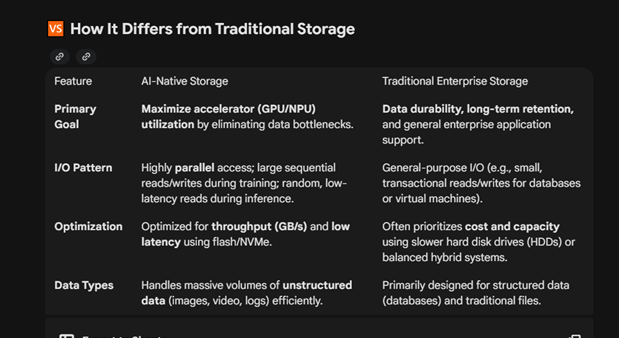

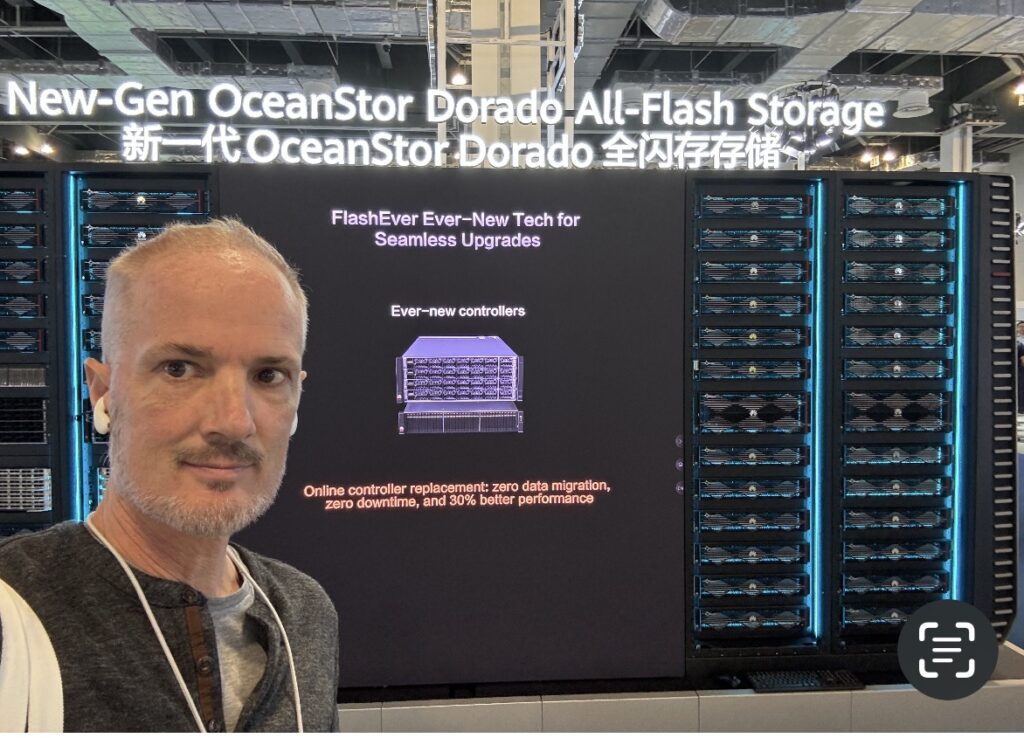

Here is a summary of AI-Native vs. Traditional Storage (Gemini generated). And a photo of Huawei’s OceanStor Dorada storage.

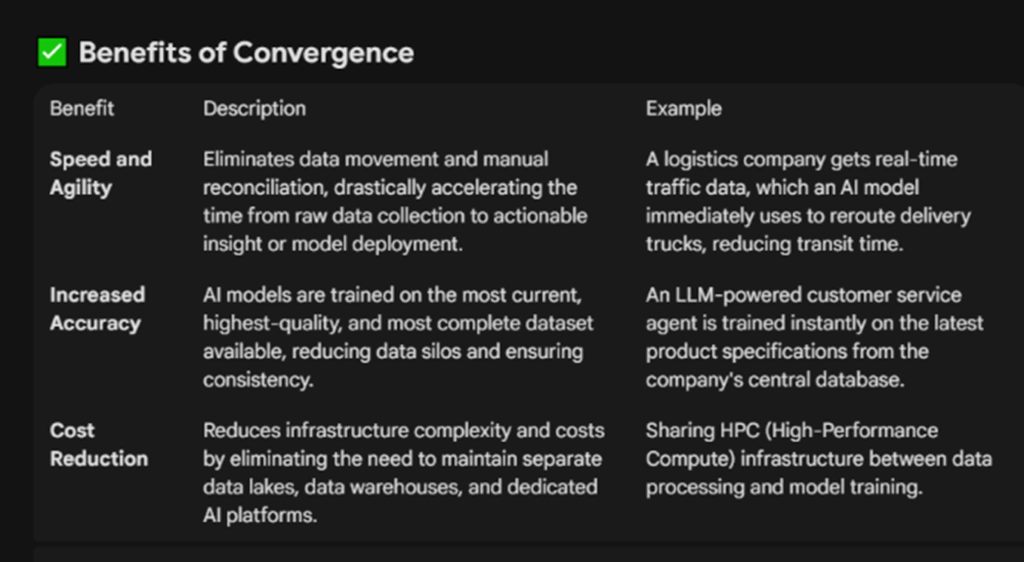

Here is a summary of AI-Data convergence. Gemini generated.

——-

Related articles:

- Two Lessons from My Visit to Tencent Cloud (1 of 2) (Tech Strategy)

- Tencent Cloud and Mini Programs Go International. Lessons from My Visit to Tencent HQ. (2 of 2) (Tech Strategy)

From the Concept Library, concepts for this article are:

- AI Infrastructure and AI Data Centers

From the Company Library, companies for this article are:

- Huawei Cloud

———-Transcription below

00:06

Welcome, welcome everybody. My name is Jeff Towson and this is the Tech Strategy Podcast from Techmoat Consulting. And the topic for today, an AI infrastructure update.

00:21

from our visit to Huawei. Now last week, me and about 67, 70 people, we were bouncing around Shenzhen, visiting some companies, doing a lot of lectures, guest speakers, really had a great time. Well, I had a great time. I think most people had a great time. And we also went to the Huawei campus in Shenzhen, which is really interesting, fantastic campus. mean, it’s really beautiful actually. And they have tremendous information in their exhibition halls and other things about what they’re doing.

00:51

which is a lot, but there’s one exhibition hall which is on hallway cloud. That’s my favorite one. I’ve been wanting to talk about this for, I went there about six months ago when it just opened, and I wasn’t really allowed to talk about it back then, but people are talking about the cloud matrix and their new chip architecture and all that now it’s all public, so I was going to talk about it, and I thought I’d go through sort of what

01:15

the update is from all of that, what I learned. And also, for those of you who were on the trip, I said, look, that was a lot of information very, very quickly. uh Tons and tons of info, slides, graphics, use cases, all of that. So, I said, it might be helpful if I go through my own summary of what a lot of your saw. So that’ll be it. So, it’s a bit of a summary. It’s a bit of an update for what’s the frontier of AI architecture coming out of China, more or less. And that’s going to be

01:45

for today. Should be fun. Well, I think it’s fun. Now let’s see standard disclaimer. Nothing in this podcast or my writing website is investment advice. The numbers and information for me and any guests may be incorrect. The views and opinions expressed may no longer be relevant or accurate. Overall investing is risky. This is not investment, legal or tax advice. Do your own research. And with that, let’s get into the topic.

02:10

Now, the timing on this is pretty good, because I finished up four articles over the last month or so about AI infrastructure, which was kind of a lot. Part one was about these big AI data centers they’re building, what those are about, which is really becoming.

02:27

the infrastructure of intelligence that businesses and others are tapping into. The second one, part two, was how AI compute is just different. So, the foundation, the core of everything, you know…

02:42

Compute being done by NPUs and GPUs in parallel form doing generative AI type, linear algebra, matrix multiplication types, mathematics. It’s just different than CPU based compute that we’ve seen on computers and laptops forever. So, the core is different. So, I talked about how that’s different and how that therefore sort of requires different architecture.

03:08

Part three, I started to get into the so what, which is the cost. That was really what I was trying to get at. You can’t really think up business strategy until you understand what these things can do and what they cost. So that was part three was sort of getting into the generative AI operating costs. And part four, the latest one was at the center of that cost question is what I called the cost of correctness.

03:37

How much does it take to create and maintain correct answers or generation of content in one of these models that’s within an app or a service? Because it turns out it can be very significant. It can cost a lot to create.

03:56

this sort of level of correctness, can take a lot to maintain it. You might need a lot of humans in the loop. So, the economics can look very different. It can look a lot more like a service business than a straight software business, which means lower margins, more people, things like that. So that was kind of what I was trying to get at. And I was pretty much done with what I wanted to say. I think I’ll have maybe one or two more.

04:18

But then this visit I thought would be a nice way to sort of wrap a lot of that together and review a little bit and sort of tie it together. So thought yeah okay let’s do this and hopefully that’s helpful for the people who were there as well. So, I want to sort of talk about four things that I saw in the visit and it was one of my favorite visits for the trip. I mean I was taking notes like crazy because I’m always looking for what’s new.

04:44

Like what are they talking about? They weren’t talking about a year ago. Where’s the frontier? And for me, there was really sort of four buckets that I saw going through the hall and listening to all the presentations and all that, which are basically, they talked a lot about AI compute, which is Cloud Matrix 384, which I’ll talk about. They talked a bit about sort of, I don’t know what the word for this is, sort of AI native storage. The idea of, you know,

05:13

Storage is not really that exciting of a topic. We don’t usually talk about sort of memory and how you store things and then the GPU or CPU draws on it. You know, it’s a subject. There’s flash drives and solid-state drives and all of that. Okay, it turns out AI native storage is really pretty interesting. And Huawei’s doing a lot in that area. And then related to that is what we call sort of the AI data convergence.

05:41

you know, what’s sitting on all this storage? Well, you got databases, right? And typical when we think about traditional data databases, we’re thinking like something that’s kind of like an Excel spreadsheet sitting on a solid-state drive. That’s the data, that’s the storage. You know, relational databases and so on. But once you move into AI architecture, generative AI architecture,

06:05

you know, the data system just changes tremendously. So, there’s this idea that’s floating around that.

06:13

to manage and make the databases work, you have to basically combine data with GPUs so that there’s sort of AI within the database itself that manages and cleans it and gets it ready to use by the central GPUs which do the generative AI. So, there’s this sort of data AI convergence that people are talking about. That’s number three. And then the last one is really just sort of uh data centers.

06:42

When you put all this together, you get the server racks, you get the cooling. I think Huawei has a very compelling global picture for this, which they call Cloud Ocean, Cloud Lake, Cloud Pond. Different levels of this sort of data being stored around the world and the computes may be close by if you have fast applications, or maybe the computes far away.

07:06

So, I’ll talk about those. Those are kind of my four and I was looking for updates on those things and then they had lots of use cases. Smart mining, smart transportation, smart banking, everything’s got the word smart in front of it now. But I’ll mostly talk about those three or four things. Okay, so let’s get to number one, which is the most exciting, the most interesting for me, which is basically Cloud Matrix 384.

07:30

This is the thing, I’d heard about this a year ago or so, and I couldn’t really talk about it, but a couple months ago they made announcements about what the Cloud 384 was, and yeah, it’s a big deal. This is, you if you’re looking for the answer to the question of how is China going to compete in frontier high level computing for generative AI without having access to Nvidia semiconductors?

07:59

This is pretty much the answer right now. There’s other groups doing stuff that’s similar. They’re not the only ones. But this looks like the approach. The architecture they’re using is basically getting in the range of being Nvidia level performance without having Nvidia chips.

08:19

Now they’re also working on high-end semiconductors, but most people would agree that they are, if the US is in three nanometers to four to five, you know, China’s more like five to seven, five to eight, something like that. So, you know, there are ways behind and there, now what’s going on behind the scenes? Do they have lithography machines? Where is SMIC in terms of foundry capabilities? Do they have a CUDA-like language that’s being used? Hard to know what’s true. A lot of its kept pretty.

08:49

tight-lipped. I don’t know. I suspect there’s a lot more going on there. We don’t know about it. But we’ll talk about what is public, which I think the Cloud Matrix 384 is a good example of that. And that was in Article 2, Part 2 for understanding AI architecture. I talked about how they had sort of actually, no, was number 1 and number 2.

09:11

what this includes, how many chips are involved, how many chips per rack. And basically, when you add up all the chips within this, what they’re called super cluster, uh well, it’s actually not quite a super cluster, but anyways, when you add this thing up, you get 384 chips that are all working closely together in a cluster. Hence CloudMatrix 384. So, what’s the architecture? Basically,

09:36

it’s going from sort of a master slave architecture to an everything-to-everything architecture. Traditionally, the way the compute has done is you have uh really a CPU at the center.

09:50

And that’s kind of the choke point. You can think about it like, you know, that’s the center of a radius or the center of a wheel. Everything goes into the center. And then from there, the CPU will send some to other GPUs and other things. But it kind of looks like a wagon wheel with the CPU at the center. When you go to everything to everything, basically every chip can talk to every other chip directly, pretty much.

10:16

So, you’re wiping out these choke points, these bottlenecks that can hit your latency, it can hit your bandwidth, because the whole point of generative AI is you have to have tremendous data flowing all the time in tremendous volume to get it to work. And you have to have…

10:36

Tremendous amount of compute the flops are very high well rather than going into one chip all the chips work together Because they’re all connected to each other so you can sort of allocate Compute from all over the cluster to whatever problem you want you can all cut all of it a little of it But it all becomes very dynamic in terms of data flow bandwidth and especially allocation of compute When everything’s connected to everything dynamically

11:05

Now everything I just said is also true for how Nvidia is doing their pods and such. They have a similar architecture. Both are a departure from sort of traditional architecture, which is more sort of hierarchical command control. The difference is with, let’s say, Huawei and Nvidia is Huawei is using a lot more chips because their chips are less powerful.

11:28

Nvidia’s smaller number of chips uh smaller cluster so you know it’s basically a bigger version of that and here’s a little bit more on that here’s I was looking up sort of definitions of this

11:41

Here’s a good definition I heard. The whole sort of concept of, look, you have very intensive AI workloads in large language models, both for the training and the inference. So, it’s a move away from traditional hierarchical computing architecture more to a peer-to-peer, fully pulled and disaggregated system. There’s no central point commanding everything. So, some of the characteristics, as mentioned, it’s disaggregated and you can basically pool

12:11

resources. So instead of a CPU sort of controlling things where you’re going to have limitations on memory bandwidth and network bandwidth, no, you can basically disaggregate the cloud matrix into separate pools and you can sort of allocate everything as you see fit, but the node boundaries are no longer fixed. So, everything’s pooled and you can kind of do dynamic allocation.

12:38

Again, peer-to-peer relationship, pretty standard. For the 384, you’re talking about the Ascend NPUs. They have a couple. uh

12:51

technology of computer architecture, one for their traditional compute and one for GPUs, generative AI. That’s the Ascend uh series of chips. Okay, so they connect all their NPUs with basically high speed, everything connecting to everything communication, which they call their full mesh. So, if you look up the Huawei stuff, you’ll see that a lot. It’s uh a full mesh and they use kind of a matrix link architecture. And basically, any NPU can connect with any other NPU.

13:21

directly and do what’s what and that gets you large-scale parallel computing which is a big deal. So why is that better? Well, when you get rid of the bottlenecks everything’s much more efficient. The whole system can scale up a lot easier. This is actually kind of a big problem with generative AI computing. You know…

13:44

Traditional computing, the workloads, they move, but they don’t move erratically. They don’t double or triple in seconds. But you send certain questions to an LLM, suddenly there’s a big spike in the compute necessary. So, you need a lot more elasticity in your ability to compute. Well, if you have this peer-to-peer mesh type network, it’s very easy to scale up the compute you’re allocating second by second.

14:14

So, it scales very elastically, that’s kind of a big deal. And then you basically just get more uh utilization from the chips you have. Instead of waiting and waiting for workloads to be processed, you can queue them up and sort of allocate them very dynamically, which is more efficient, but it also gets you better utilization of your chips overall, which is effectively cost.

14:38

So, I actually looked up the difference between sort of the Huawei Cloud Matrix, which is there, they’re Ascend 910C chips, 384 of them. Well, actually there’s other chips in there. They’re not all Ascend 910Cs. And if you compare that to Nvidia, the NVL72, which is sort of a similar connected architecture, they’re pretty similar. The switches are a bit different. Matrix Links is what connects for Huawei. The NVLink and the NVSwitch is what Nvidia uses.

15:07

They’re both all to all full mesh, but basically one is smaller than the other. I didn’t see too many other differences and they both bypass the traditional CPU bottleneck. okay. The other benefits of this, basically your flops go way up a lot, a thousand, 10,000-fold. Flops are your floating-point operations per second. It’s basically computed speed. How fast can it do the math?

15:36

especially complicated math. The other thing to keep in mind with the cloud matrix is okay, your compute goes way up, so does your network bandwidth.

15:46

Basically, the communication within the processor, all of that is faster, lower latency, fine. Actually, the part I thought was interesting was you also get sort of a big bump in memory bandwidth. There’s communication between the GPU, CPUs, all that. Okay, so that’s network bandwidth. But you also get communication between the processing power and where the data is stored, so your memory.

16:11

And typically, you have sort of high bandwidth memory on each chip. So, the chips have memory on them themselves, high bandwidth. And then you also have sort of an aggregate system wide menu, memory, which tends to be a lot of flash memory, not as much solid state because just the nature of the compute. So, you can sort of pool and disaggregate the memory as well. So, for those of you who are on the tour, you might have noticed that like.

16:39

They had a couple benefits they’d listed on their slides, and it was basically those. Big increase in FLOPs compute power, the network bandwidth jumps, and then the memory bandwidth jumps. The words they used were things like, you can scale out much faster, add more compute resources, you can scale up, you get higher performance inference, you get…

17:04

When you get an interruption to your training time, it can be quicker for recovery. You might have seen all those little notes that they had presented. They were basically talking about those three points. Memory bandwidth, network bandwidth, and a compute at the center, all of that within the Cloud Matrix architecture.

17:21

And they actually had a model of the Cloud Matrix 384 in the room. They didn’t let us take pictures of it but was actually there. I’ll put a picture in the show notes. I got a picture of one of them, not a model, but the real thing at a conference a couple months ago. I’ll put the picture in there if you’re curious what this big thing looks like. And it’s pretty huge. OK, so that was kind of number one. First takeaway, first lesson, Cloud Matrix 384 is a new AI native sort of architecture for compute.

17:51

The next one, AI native storage, which I mentioned. It’s funny, nobody ever talks about storage. It’s not that exciting, but it’s everywhere. It’s in every computer, it’s in every phone, it’s in every smartwatch and whatever. yeah, it turns out when you switch from traditional compute to AI-based compute, generative AI, yeah, the data requirements.

18:16

change dramatically. One, you just need a tremendous amount of data, far more. The nature of the data is different. It’s not structured data and numbers in a spreadsheet. It’s photos and images and recordings and, you know, it’s what they call unstructured data. And then in between you have sort of quasi-unstructured data, unstructured, but mostly it’s unstructured. The vast majority of it is. It has to move around with tremendous volume and speed.

18:46

you’ve got this massive pool of data now in your storage, it’s got to get to the CPUs and the GPUs very, very quickly, which it turns out you need tremendous bandwidth. the nature of the storage is much more about how fast sort of the reading is and writing process in and out of the storage as opposed to.

19:08

Traditionally memory, the thing you might care about was if I’m going to put something on a solid-state drive in my laptop or server, I need to make sure that it’s secure. I need to make sure it doesn’t degrade so that two years from now that memory is still accurate and preserved. So solid state drives in particular, they’re very good at preserving data for the long term. They’re not that good for speed of data in and out.

19:34

But when you’re doing generative AI, we don’t really need to preserve all of this data. In fact, a lot of it’s going to be disposed of. The important thing is keeping this river of data flowing between the outside world into our storage, into the computer architecture, and then back into the storage where it may need to be rewritten and such. So, it’s much more like, that’s how I view it, like a river. And you got to keep the river going. So.

20:04

AI native storage is this idea of that you’re to have to redesign storage architecture from the bottom up to meet these weird demands of AI workloads. And you really can’t just adapt traditional enterprise storage. No. So AI native storage is really what we’re doing is we’re embedding intelligence into the storage itself. And then we’re putting on high speed data access. m

20:30

So, what do you need within all of this? Well, first thing you need is sort of very low latency and extremely high performance. So, you need the high throughput, lots of reading and writing, huge amounts of data into the storage, often in very sort of large parallel chunks. So, you need sort of this sustained high bandwidth data delivery. And if you don’t have that, then you’re

20:57

your GPUs, your accelerators, they’re not being fully utilized. You want the utilization of your very expensive chips to be like maxed out all the time. Well, for that to happen, you’ve got to have these rivers of data moving in and out very quickly. So, I just low latency is a big deal. um There’s this idea of putting AI within the storage itself. that if you, they call it AI ops for storage. If you put,

21:25

AI and machine learning algorithms that you want them to actually manage the storage itself. You want it to be moving files around. You want it to do predictive maintenance. You want sort of what they call intelligent data placement and caching.

21:40

I would say caching, caching. uh So yeah, you kind of want your, basically your drive, your storage drive to get smart and start to sort of dynamically optimize itself against the demands. And then the third bit is you have sort of again, elastic scalability, parallelism. You want these storage devices to be able to grow quite easily like the…

22:07

sort of the peer-to-peer architecture for compute, you want the same thing in the data. So horizontal scalability, moving things in parallel form, not all serial processing and so on. Anyways, I’ll put a slide in the show notes. Actually, I’ll put two. I’ll put a slide about how AI native storage is different than traditional enterprise storage and also put one for the compute I talked about. Anyway, so.

22:34

When we were walking around, that was really the second area for those of you who are there. We walked into the left, there was all the compute, the Cloud Matrix stuff. Right next to that was all about storage. That wasn’t there a couple months ago. So that’s interesting that they’ve started working on this. And I’ve heard their executives talk about this in comments over the last couple months. So yeah, that was new. It got my attention.

22:58

Okay, bucket number three, these are going to be shorter, we got two more. number three, I won’t go through too much, which is just the foundation models. What are the big components? Well, it’s the compute, it’s the storage, it’s the models, and it’s the databases. Those are the four things. um I’ve talked about this before, the sort of foundation models, which for Huawei is called Pangu.

23:24

For Alibaba it’s Quinn. mean everyone’s got their names. Paddle paddle. They’ve all got these names. But I always liked how Huawei talks about this where they talk about the L0 level which is your large foundation models reasoning.

23:39

GPT like models, multimodal models, scientific models are emerging and so on. Then L1 is sort of, you know, they make those industry specific where they adapt the foundation models into industry specific models. And then L2 is even more specialized, more to scenarios, things like chat bots for customer service and whatever. So, they have kind of a nice framework for how to think about models and they’re building across the board. But their adoption, obviously,

24:09

they’re not as widely adopted by developers, which is a, you know.

24:14

They’re much more of a hardware maker today, but they could be a software and a foundation model in the future. They’re in the running, but they’re not like DeepSeek or Alibaba yet. So, the Pongu models are interesting, and then they have their platform for how to create these things, ModelArt Studio. Interesting, I won’t go through that because uh we’ve talked about it before. okay, models are a big important deal to understand. And then the last bucket is uh DataArts, which is really this

24:44

data AI convergence thing. That’s really, I’ve been thinking, this is kind of the thing I’ve been thinking most about in the last couple weeks. This idea of we’re starting to combine data and databases, not storage, which is the hardware, data and databases, which is the software. We’re starting to combine that with AI itself. Now, why would you do that?

25:10

I listened to a podcast the other day and they were talking about the same convergence within enterprise databases. And the guy who was on the podcast, he had a really good summary. And he called it like the goal for every enterprise before you can do anything with AI apps, whatever you dream of doing, you have to basically have AI ready data. That was his phrase, AI ready data.

25:36

which I thought was a great way to think about it. It has to be in a form that it can go directly into the GPUs and the compute to generate text or whatever it’s supposed to do. And the path from getting data from the world, data from your business, data from everything to the AI ready state is pretty difficult.

25:59

and it’s tremendous amount of work. I describe it as a river. That’s not really true. It’s more like a river with 10 big water processing plants stuck right in the middle of the river. And you got to get the water through those so they can come out the other side and then the river becomes usable.

26:18

So, here’s the list this guy was talking about. He said, understand for enterprise data, know, most data is unstructured. It’s voicemails, its meetings, its written reports, some of it’s in Excel spreadsheets, most of it’s unstructured. What do you do with it?

26:34

He basically says, look, you got to get it ready for rag. Most generative AIs retrieve a log minute generation. You got to get in the right form for that type of uh generative AI activity. Here was his list. You have to gather the data, which is a process that never stops, especially if you’re pulling it from the outside world, from customer behavior. You’ve got to extract the data that’s useful within the sea of data.

27:00

You’ve then got to sort of extract the semantic knowledge within the text or whatever you have. Like most data is of no value. You’ve got to sort of pull the knowledge that matters out of the random data. You know, the signal for the noise. You’ve got to chunk that knowledge-based data up into homogeneous sizes that can be managed and manipulated.

27:25

Then you want to enrich those chunks with metadata, tagging, labeling, so you can search for them, things like that. uh Then it gets interesting. Then you need to embed it. And this is the part where, like I had to watch a lot of videos before I understood this. You know, we have, ultimately it all has to be in numbers.

27:46

It all has to be numbers because ultimately this is all math. So, you have to get it into a form that the GPUs and CPUs can do mathematical calculations on it. So, you’ve got to convert knowledge, text, images, sounds, all of it into math. Well, that’s embedding. So, you transform to a numerical representation of the information, the data, the knowledge in a way that can be stored and searched.

28:14

That’s kind of, now we’re talking about a database. And the last step is then we got to index it within a vector database. know, a vector database is for those who aren’t familiar.

28:25

they basically capture knowledge by saying this word cat is similar to the word dog and it is more similar to the word dog than it is to the word table because cats and dogs are somewhat similar in that they’re both animals, they’re both mammals. Right? So, vector data places put things in spatial orientation and these are just strings of numbers, vectors. And that’s how you capture sort of capture knowledge. The distance between various vectors tells you how

28:55

or different they are. That’s how you can search for things and do things. Anyways, all those steps have to happen before the data is sort of AI ready data. So those are the power plants, the processing plants that are stuck in the middle of the river that everything has to go through before it can be used. And that’s a big deal. And when you try and do this at large scale, because we’re talking about huge amounts of data, that’s a problem.

29:24

It also turns out that the data is growing and growing. Your database keeps growing. So, you got to think about the data velocity. How fast is this data coming in? And then also the existing data you have in your databases, how fast is that changing? You know, we have to rewrite and change things. So, we have to take in new data all the time and we have to change the databases we already have all the time.

29:53

So that’s basically data velocity. Now that doesn’t really fit the river analogy, but I think it’s kind of close. Anyway, so that’s where this idea of how we convert uh this data AI convergence is sort of to solve that problem. Because right now it’s a lot of data scientists doing all these steps. We want AI and AI agents to take over this process of getting all this data into an AI ready form.

30:19

That’s kind of the idea. I that’s my simple explanation of it. those of you who are data scientists are probably gritting your teeth at that explanation. ultimately, I’m a software and a business guy. So, my knowledge taps out at a certain point. oh, So yeah, that’s the idea. So, in the Huawei exhibition, you would have seen an area called Intelligent Data Management, also called AI native storage.

30:44

Where basically you want to have AI and AI agents do all this processing. So how do you do that? Well, you take a GPU out of You know the central core and you stick it in the storage

30:59

So, the storage device where all the data sits has GPUs in it now. You want the AI and the AI agent in the storage device. You don’t want to send the data from storage to the chips and have it done there and then send it back. That’s very efficient. You want to have it done all within the storage device. So anytime the processor, the accelerator need the data, it can come out of the storage all ready to use.

31:28

You don’t want it to go from storage to the CPUs and GPUs, have to get cleaned and used properly, and then it can be used. No, you want it to come out of the storage, done. That’s the idea. So, what does that mean? Well, that’s AI native storage. That’s the convergence. It’s got to govern the data. It’s got to curate the data, all those steps. And uh I’ll put it in a little slide about the benefits of conversion, which is accuracy, cost reduction, speed and agility and all that.

31:56

And then you got things like security, which is a problem. So that’s kind of the fourth one I wanted to sort of bring up. And they actually have a database uh program there called GaussDB, which they’ve been talking at a lot. Instead of using a Salesforce database, you could use a Huawei GaussDB system. uh Okay, they’re basically putting AI into that software. Anyways, those were sort of my four big buckets to think about.

32:26

The compute architecture, Cloud Matrix 3D4, very interesting. uh the sort of AI meets storage, the idea that the storage devices are going to get smart, the foundational models, the LLMs, and then this idea of data AI convergence, and that databases are going to have agents operating within them, fixing all these steps and getting everything AI ready. uh the last bit.

32:53

which I’ll go through quickly is they did have a little bit about their data centers there. If you saw those big racks, when they show data center racks, they always have glowing tubes on them to make them look cool. I’ll put in a slide of uh one of these racks if you didn’t see it. They’re actually pretty cool. That’s where all the chips go in the racks, the racks of the server, and then they put all the cooling.

33:16

The cooling is actually kind of interesting because, know, when you go to any generative AI computing generates a lot of heat because you have a lot of chips. But it’s even more if you’re doing this China version where Nvidia uses far fewer chips because they’re more powerful. Huawei and most of the China players, they’re stitching together 384 chips in this case to match the performance. But that also means you’re going to generate a lot more heat.

33:46

you’re going to use energy and two you’re going to generate more heat which means you need more water cooling. You can put a fan in a laptop and cool it down but these servers need water. So, there’s a couple models, I don’t know if anyone saw them at the center, there’s a couple where you sort of put tubes.

34:04

that lay across the circuit boards with water flushing through it all the time to keep it cool, to soak up the heat. There was one version there where you actually have chips and circuit boards. Basically, the whole server rack goes underwater and the whole thing’s just designed to be underwater. And forget the tubes, let’s just dunk the whole system.

34:25

or at least the Circle Board and all that. So, there was a model of that there, which is pretty cool. Anyways, and then they do data center operations and management, which is a pretty interesting business actually. So anyways, that was the last bit. And then lots of use cases all throughout there, which are pretty fun. Anyways, that is it for this week. I uh hope that’s helpful, interesting. I thought it was fascinating. I took a ton of notes trying to find out where the state of all this stuff is.

34:55

as I kind of said, for me the rubber hits the road in all of this in terms of business strategy. What can we do as a business? Well, that comes down to at least two things. What can this technology actually achieve reliably such that we can put it into a service, an app, the capabilities, and then two, what is that going to cost me? Well, the biggest component of the cost is the AI architecture, basically.

35:24

A lot of people are going to be doing this with contracts with cloud providers to access everything I just mentioned. Some will do it locally. Bigger businesses will do that locally. You can also download a lot of this now. And then you have a human component, a labor, which can be significant, which was part four of these articles was about how the cost structure of these services can stop looking a lot like software and can start looking like a human service.

35:54

business. And it really depends how difficult of a question you’re dealing with in terms of the AI. So that cost bit is important. That’s where I’m trying to get to with all that. And then out of that, once you know the cost structure and the capabilities, you can come up with moats and a competitive strategy and a business strategy. That’s where I’m going with all this. I’m not quite there yet, but I’m getting there. Anyways, that is it for me for this week.

36:22

I that’s helpful. Those of you who went on the trip, I hope you had a good time. I had a great time. uh We saw a lot of cool companies. Huawei, Xiaomi, Tencent, Insta360, I thought was really neat.

36:35

the stores, the lectures, machine, like robots, someone from Pop Mart, that was pretty fun. We ride, yeah, I thought that was great. So anyways, I’m taking a bit of a rest this week. I was pretty burned out at the end of all that. yeah, for those of you who are subscribers, I owe you a couple articles I sort of fell behind last week. And the four articles I just mentioned about understanding AI architecture, they’re all there already, so you can look at those. But I owe you a couple articles. oh

37:05

back up this week. Anyways that is it for me. I hope you’re all well and I will talk to you next week. Bye bye.

———-

I am a consultant and keynote speaker on how to increase digital growth and strengthen digital AI moats.

I am the founder of TechMoat Consulting, a consulting firm specialized in how to increase digital growth and strengthen digital AI moats. Get in touch here.

I write about digital growth and digital AI strategy. With 3 best selling books and +2.9M followers on LinkedIn. You can read my writing at the free email below.

Or read my Moats and Marathons book series, a framework for building and measuring competitive advantages in digital businesses.

Note: This content (articles, podcasts, website info) is not investment advice. The information and opinions from me and any guests may be incorrect. The numbers and information may be wrong. The views expressed may no longer be relevant or accurate. Investing is risky. Do your own research.