In Part 1, I went through some of the craziness in AI data center building.

For the past twenty years, digital strategy has had a pretty static and stable infrastructure. There are Apps, Databases, and Compute. And my focus has been on the first two.

- How do we use this app or digital service in our business?

- What kind of database is required for this?

But I rarely talk about the CPUs, storage and networking. The infrastructure level. It’s pretty standard for traditional CPU-based compute.

But GenAI means adding foundation models to the architecture. Now we are talking about AI infrastructure.

This means shifting out of deterministic traditional compute, where each routine is performed the same way each time. Query a database ten times, you will get the same result.

AI compute is generative and probabilistic. Ask ChatGPT a question five times and you get five different answers.
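Here is a minimal sketch of that difference. The lookup table stands in for a database and the weighted word list stands in for a model. Both are made-up toy data (`inventory`, `next_word_probs`), not how any real product works.

```python
import random

# Deterministic: a lookup table standing in for a database (hypothetical data).
inventory = {"sku-123": 42}

def query(sku):
    """Return the stored value. The same input always gives the same output."""
    return inventory[sku]

# Probabilistic: a toy next-word distribution standing in for a model
# (the words and weights are invented for illustration).
next_word_probs = {"fast": 0.5, "expensive": 0.3, "everywhere": 0.2}

def generate(rng):
    """Sample one word according to its probability."""
    words = list(next_word_probs)
    weights = list(next_word_probs.values())
    return rng.choices(words, weights=weights, k=1)[0]

# Query the "database" ten times and collect the distinct results.
db_results = {query("sku-123") for _ in range(10)}

# Ask the "model" ten times. The generator is unseeded, so runs differ.
rng = random.Random()
ai_results = [generate(rng) for _ in range(10)]

print(db_results)  # a single value, every time
print(ai_results)  # a mix of words that changes from run to run
```

The database side collapses to one answer. The model side is a draw from a distribution, which is why the same prompt can come back differently each time.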

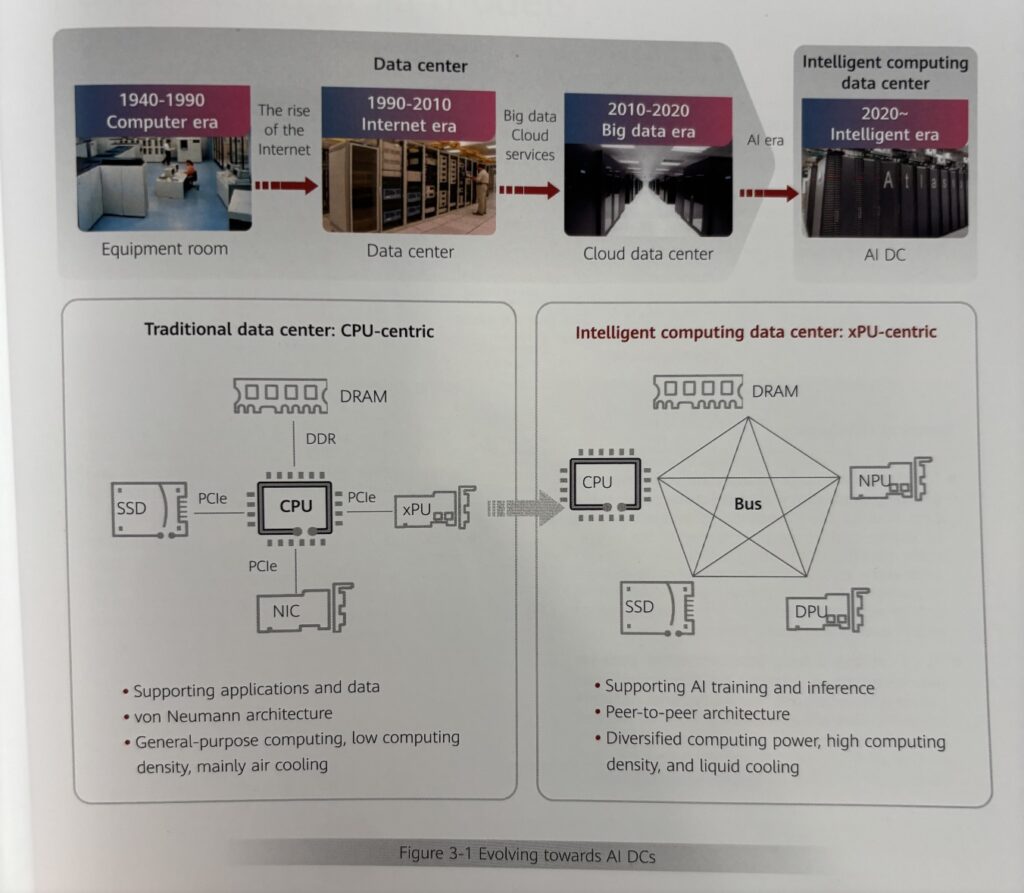

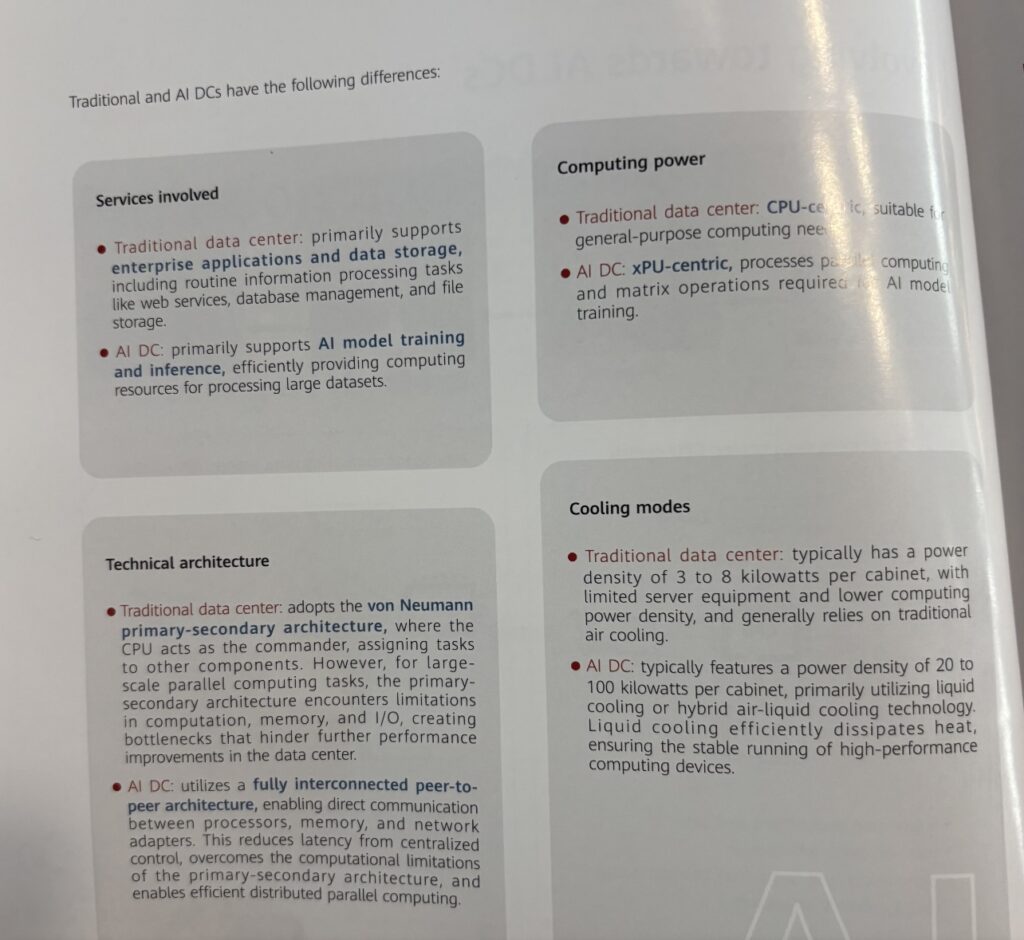

The workloads, compute requirements and architecture for foundation models are just different than for traditional software. Here’s how Huawei describes this in their AI Data Center Whitepaper. Note: These pages are worth reading in detail.

That is a good description of the evolution of data centers.

Here is a good summary of the key differences between traditional and AI compute.

A Simple Analogy for Traditional Compute

Traditional compute based on CPUs is like a librarian who is given instructions to retrieve 5 specific books. The librarian uses a catalog and then locates the 5 specific books in the library. The books are delivered as requested.

In this analogy, the librarian is the CPU. The library is the database.

And this is a structured, deterministic process where the system retrieves or processes data based on explicit rules or predefined logic.

Traditional software does this type of process all the time, when a database is queried or a script is run. And it produces a repeatable output. Think creating an Excel spreadsheet. Or retrieving a video to watch.

Traditional compute is linear, deterministic and rules-based. And the mathematics used is mostly addition, subtraction, division and multiplication.

Traditional compute requirements include:

- Hardware: General-purpose CPUs (e.g., Intel Xeon) in a standard server.

- Workload: Sequential processing, low parallelization. For example, a single-core CPU at 3 GHz can handle the task in seconds to minutes, depending on data retrieval speed.

- Memory: Minimal, typically <1 GB RAM for text assembly and database queries.

- Example Compute: ~0.1 teraflops (TFLOPS) for basic data processing and text generation.

A Simple Analogy for AI Compute

AI compute is like tasking 50 writers in a library to write a new article. The writers have a lot of knowledge in their heads that they have learned from the library content. And they can go out into the library quickly to grab snippets of books. But they mostly sit at a table and work collaboratively to create the new article. And they adapt it to fit the request.

In this analogy, the writers are the pre-trained models that have learned from datasets (i.e., books in the library). There are way more steps than in traditional compute. And they are happening in parallel (i.e., lots of writers working together). And because the generated content is new, it will be different each time.

This is probabilistic, neural network-based processing to create novel content. And the math is mostly matrix multiplication.
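To make "mostly matrix multiplication" concrete, here is a small sketch in plain Python. The matrices and the 4096 size are arbitrary examples I picked, not taken from any specific model. The operation count is the usual approximation of one multiply and one add per term.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix using plain lists."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # Each output cell is an independent dot product, which is
            # why a GPU can compute many cells at the same time.
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out

def matmul_flops(m, k, n):
    """Approximate operation count: about k multiplies and k adds per cell."""
    return 2 * m * k * n

x = [[1.0, 2.0], [3.0, 4.0]]
w = [[5.0, 6.0], [7.0, 8.0]]
y = matmul(x, w)

print(y)                               # [[19.0, 22.0], [43.0, 50.0]]
print(matmul_flops(4096, 4096, 4096))  # 137438953472
```

One multiplication of two 4096 x 4096 matrices is already about 137 billion operations. A transformer does many of these per generated token, which is where the demand for parallel hardware comes from.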

AI compute requirements include:

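- Hardware: Specialized GPUs (e.g., NVIDIA H100) or TPUs, often in clusters. A single H100 (SXM version) is rated at roughly 1,000 TFLOPS of peak dense FP16 Tensor Core throughput, although sustained throughput in real inference workloads is typically far lower.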

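- Workload: Highly parallelized matrix operations for transformer model inference. Generating 500 words (roughly 650-700 tokens) might require ~10-100 trillion floating-point operations (FLOPs) in total for 7B- to 70B-parameter models, using the common rule of thumb of about 2 FLOPs per parameter per generated token. The exact figure depends on model size and optimization.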

- Memory: Significant, e.g., 80 GB GPU memory for a 70B-parameter model, plus system RAM for data handling.

- Example Compute: ~1-10 TFLOPS for inference, orders of magnitude higher than traditional computing for this task.
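The memory bullet above is easy to sanity-check with back-of-the-envelope arithmetic. This is a rough sketch that counts only the model weights. The bytes-per-parameter values are the standard sizes for each number format, and the choice of formats to compare is mine. It ignores the KV cache, activations and framework overhead, so real requirements are higher.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params, precision):
    """Memory for the weights alone, in GB (10^9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9

params_70b = 70e9    # the 70B-parameter model from the list above
gpu_memory_gb = 80   # the 80 GB GPU memory figure from the list above

for precision in BYTES_PER_PARAM:
    need = weight_memory_gb(params_70b, precision)
    fits = need <= gpu_memory_gb
    print(f"{precision}: {need:.0f} GB of weights, fits in 80 GB: {fits}")
```

At FP16 the weights alone come to 140 GB, so a 70B model only fits in 80 GB of GPU memory when it is quantized to 8 bits or fewer, or split across multiple GPUs.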

Note the last bullet point. Going by these example figures (~0.1 TFLOPS versus ~1-10 TFLOPS), AI requires roughly 10-100x the compute.

That’s not a perfect analogy. But it’s directionally correct.

***

Ok, I’ve covered data centers and a bit about AI compute. Let’s jump back to what matters for business.

What Matters for Business (A GenAI Playbook)

It’s easy to spend a lot of time digging into data centers. It’s fun.

But the rubber hits the road for businesses in how GenAI impacts the customer. That’s where the tech most directly turns into value.

And keep in mind, most tech-created value ends up going to the user. Not to profits or shareholders. For example, I have been amazed at how fast AI-generated videos are advancing. But it’s also starting to look like a commodity. Try Sora, Veo and Grok Imagine. They all work pretty well and are close to free. Most of the value is going to users. It’s starting to remind me of Spellcheck.

I was recently at the Tencent Cloud meeting in Shenzhen. And I noticed that tons of Tencent products had added the phrase “Plus AI”. So Tencent Docs was “Docs + AI”. Tencent Meeting was “Meetings + AI”. They are baking GenAI into all their products.

And we see this everywhere.

- Instagram has added AI.

- Google Earth has added AI.

- OpenAI has just announced a new browser with AI called Atlas. Similarly, Microsoft Edge has added Copilot mode. And Chrome browser has added Gemini.

- Also, this past week, Adobe announced that every product is adding GenAI, with the user interface becoming a conversation. You just tell Photoshop, Premiere, or Express what you want to do, then take over at any step when it’s faster to do things yourself.

So, that’s the first step in turning all this new tech into value. We start with putting AI into the existing products and services. Plus, creating entirely new GenAI products and services.

The second source of value (from GenAI) is improving internal productivity. That’s deploying productivity tools (everyone using ChatGPT). But it’s also trying to improve operating performance across the board. Not just getting cheaper and faster. But also getting better performance.

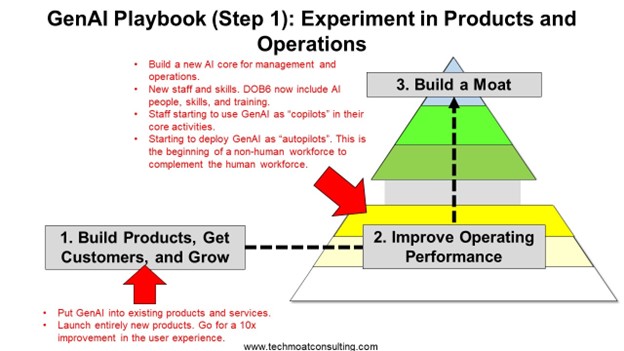

Those two areas are pretty much my GenAI playbook (Step 1), which I wrote about last year.

It’s:

- Upgrade your products. Think “Apps + AI” and “SaaS + AI”.

- Also try to make new 10x products.

- Increase internal productivity and performance.

We are also now seeing a broader approach of using GenAI (and Agents) to restructure workflows and operations. I call this the GenAI / Agentic Operating Basics. I wrote about that here.

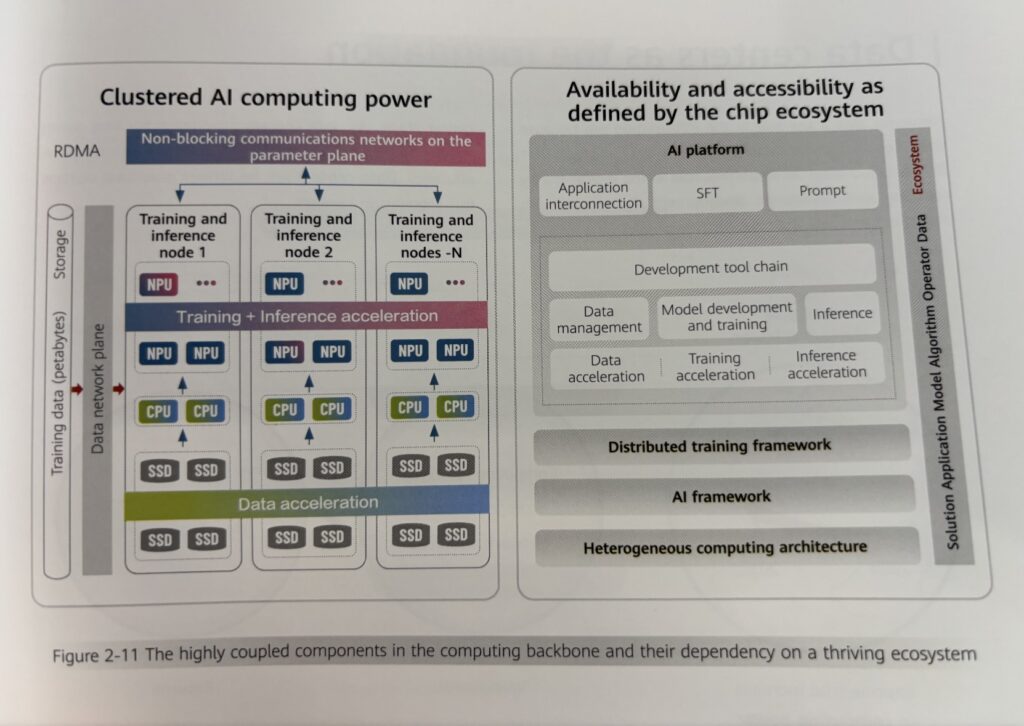

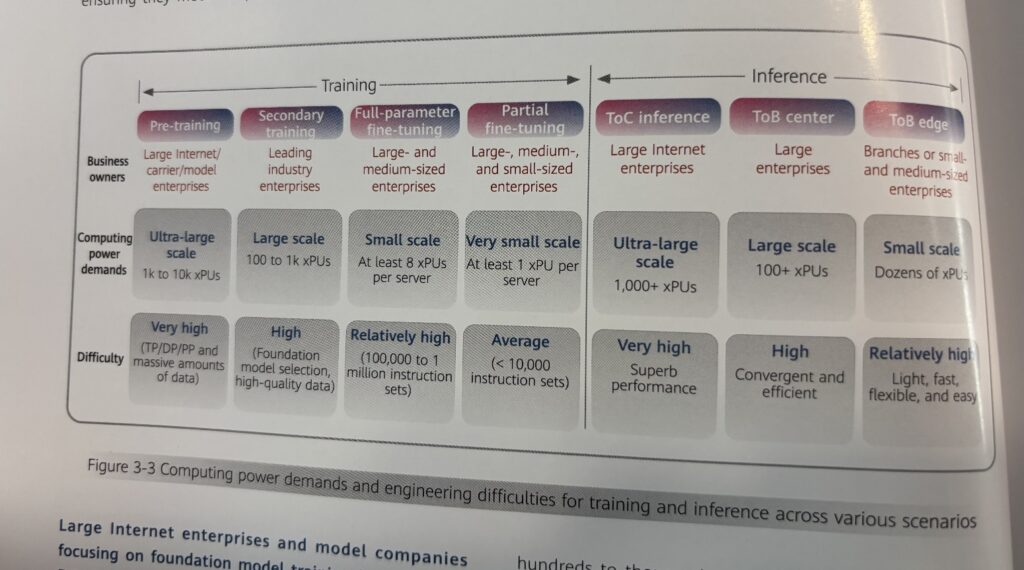

Ok. That’s most of what I wanted to cover in Part 2. Here are two good graphics on the AI tech stack.

That’s it. Cheers, Jeff

- Understanding AI Infrastructure Part 1: AI Data Centers (Tech Strategy)

- Understanding AI Infrastructure Part 3: GenAI Operating Costs (Tech Strategy)

——

Related articles:

- Two Lessons from My Visit to Tencent Cloud (1 of 2) (Tech Strategy)

- Tencent Cloud and Mini Programs Go International. Lessons from My Visit to Tencent HQ. (2 of 2) (Tech Strategy)

From the Concept Library, concepts for this article are:

- AI Infrastructure and AI Data Centers

- GenAI and Agentic Strategy

——–

I am a consultant and keynote speaker on how to increase digital growth and strengthen digital AI moats.

I am the founder of TechMoat Consulting, a consulting firm specializing in how to increase digital growth and strengthen digital AI moats. Get in touch here.

I write about digital growth and digital AI strategy. I have 3 best-selling books and 2.9M+ followers on LinkedIn. You can read my writing at the free email below.

Or read my Moats and Marathons book series, a framework for building and measuring competitive advantages in digital businesses.

This content (articles, podcasts, website info) is not investment, legal or tax advice. The information and opinions from me and any guests may be incorrect. The numbers and information may be wrong. The views expressed may no longer be relevant or accurate. Investing is risky. Do your own research.